What is synthetic monitoring?

Synthetic monitoring is an active monitoring practice that simulates user interactions with a system, such as an app, a website, or any other software exposed to end users. The data generated by these simulated interactions is collected and analyzed to evaluate how the system responds to users’ input and actions. By simulating user actions and workflows, organizations can proactively validate and monitor their applications’ behavior and performance.

A common use of synthetic monitoring is to understand and optimize websites, but the practice is much wider and encompasses many areas. It is also used to emulate desktop and operating system operations, to perform image pattern recognition, and to run HTTP checks over a network. This type of monitoring is done through synthetic tests: scripts and configuration files that tell the monitoring tool which interactions to simulate and how.

Synthetic tests are very useful to IT administrators: they make it possible to test the use cases a piece of software, or more generally a system, is subject to, and to find room for optimization, reasons for changes, or issues that need to be fixed. Synthetic monitoring is especially valuable for complex, multi-step transactions such as form submissions or shopping cart checkouts, where it helps ensure that every critical workflow performs as expected.

Synthetic monitoring is also referred to as proactive monitoring because it does not wait for events to happen but creates scenarios to verify how the system behaves, actively validating a system’s performance and availability. In contrast, classic monitoring that analyzes only real data is referred to as passive monitoring.

TL;DR:

Synthetic monitoring simulates user interactions with systems to proactively test performance, availability, and functionality before real users are affected.

- Synthetic tests are used to emulate user behavior on websites, apps, or other software, providing insights into system response and potential issues.

- Types of synthetic monitoring include uptime checks, website navigation tests, and transaction monitoring, often run from multiple locations to verify performance for users in different regions.

How does synthetic monitoring work?

Synthetic monitoring typically relies on a robot client that executes automated scripts simulating the end user’s clickstream through the application. Broadly speaking, these scripts, or synthetic tests, are created by QA (quality assurance) engineers to test a website, application, or system. Depending on the framework and synthetic monitoring tool adopted, the scripts can take many forms and syntaxes. The synthetic monitoring solution executes the tests and reports their results to the monitoring tool, where the data is analyzed to assess the system’s behavior. If problems or room for improvement are identified, the system is modified accordingly, and new synthetic tests are written to verify the changes and keep improving the quality of the system.
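To make the execute-and-report cycle more concrete, here is a minimal Python sketch of what a synthetic test runner does at its core. It is not how Robot Framework or any particular tool works internally; the names `run_synthetic_tests`, `TestResult`, and `report` are hypothetical, and each test is assumed to be a plain function that raises an exception when the check fails.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class TestResult:
    name: str
    passed: bool
    duration_ms: float
    detail: str


def run_synthetic_tests(tests: dict[str, Callable[[], None]]) -> list[TestResult]:
    """Run each synthetic test once and record pass/fail plus duration.

    A test signals failure by raising any exception, for example an
    AssertionError when an expected element or response is missing.
    """
    results = []
    for name, test in tests.items():
        start = time.perf_counter()
        try:
            test()
            passed, detail = True, "OK"
        except Exception as exc:  # any error counts as a failed check
            passed, detail = False, f"{type(exc).__name__}: {exc}"
        duration_ms = (time.perf_counter() - start) * 1000
        results.append(TestResult(name, passed, duration_ms, detail))
    return results


def report(results: list[TestResult]) -> None:
    """Print one line per result; a real tool would send these to the monitoring server."""
    for r in results:
        status = "PASS" if r.passed else "FAIL"
        print(f"{status} {r.name} ({r.duration_ms:.0f} ms): {r.detail}")
```

Treating "raised an exception" as "failed" keeps individual tests simple: they only need to assert what they expect to see.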

Synthetic tests typically automate tasks that engineers would otherwise perform by hand. Most tools also allow running synthetic tests manually, although this is less common; manual intervention mostly happens when tests are updated to reflect changes in the monitored system’s behavior and UI.

Synthetic testing is at the core of synthetic monitoring, which revolves around correctly writing and executing tests for your own use cases. Most synthetic monitoring solutions support tests for a wide range of scenarios, covering many of a company’s needs within the limits of what the vendor has built into the software. Whether you need to test an end-user clickstream through your e-commerce platform, monitor shopping transactions, or even check an online gaming service, synthetic tests can cover it.

What are the types of synthetic monitoring?

Synthetic monitoring can take different forms depending on what needs to be tested — from simple uptime checks to advanced transaction validation and web performance monitoring. Each type serves a specific purpose, whether it’s ensuring basic availability, verifying website performance, or monitoring business-critical workflows. Below are the main types of synthetic monitoring along with their typical use cases.

Uptime monitoring

Uptime monitoring, also known as availability monitoring, tracks the availability and responsiveness of a web application. Synthetic tests may simply send a ping or a GET request to specific resources to check that they are accessible, or issue multiple concurrent requests to monitor the responsiveness and performance of your web application.
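A minimal sketch of the simple GET-based case, using only Python's standard library. The function name and result fields are illustrative assumptions. Note that `urllib` follows redirects and raises `HTTPError` when the final response is not 2xx, which the sketch counts as "down".

```python
import http.client
import time
import urllib.error
import urllib.request


def http_uptime_check(url: str, timeout_s: float = 10.0) -> dict:
    """Send one GET request and report availability, status code and latency."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            status = resp.status
            resp.read()  # include the body download in the measured latency
        up = 200 <= status < 300
    except urllib.error.HTTPError as exc:  # server answered with 4xx/5xx
        status, up = exc.code, False
    except (OSError, http.client.HTTPException):  # DNS failure, refused, timeout, bad reply
        status, up = None, False
    latency_ms = (time.perf_counter() - start) * 1000
    return {"url": url, "up": up, "status": status, "latency_ms": latency_ms}
```

A scheduler would call this at a fixed interval, for example `http_uptime_check("https://www.example.com/")`, and forward the result to the monitoring system.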

As part of availability monitoring, synthetic tests can also be written for non-HTTP services (such as mail or database servers) by establishing a TCP connection to a specified host and port, measuring latency, and verifying the expected response.
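The TCP variant can be sketched the same way. This is an illustrative assumption of how such a check might look, not any vendor's implementation: it measures connect latency and, optionally, checks the greeting line that many plain-text protocols send first (SMTP and FTP servers, for example, start with `220`). For protocols where the client speaks first, such as PostgreSQL, you would omit `expect_banner` and only test the connection.

```python
import socket
import time
from typing import Optional


def tcp_check(host: str, port: int, expect_banner: Optional[bytes] = None,
              timeout_s: float = 5.0) -> dict:
    """Open a TCP connection, measure connect latency, optionally verify the banner."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout_s) as sock:
            connect_ms = (time.perf_counter() - start) * 1000
            if expect_banner is not None:
                banner = sock.recv(1024)  # first bytes the server sends
                if not banner.startswith(expect_banner):
                    return {"ok": False, "connect_ms": connect_ms,
                            "error": f"unexpected banner: {banner[:40]!r}"}
            return {"ok": True, "connect_ms": connect_ms, "error": None}
    except OSError as exc:  # refused, unreachable, or timed out
        return {"ok": False, "connect_ms": None, "error": str(exc)}
```

For a mail server, a call might look like `tcp_check("mail.example.com", 25, expect_banner=b"220")`; the host name is a placeholder.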

Uptime monitoring also extends to API monitoring, where synthetic API tests verify that endpoints respond correctly and measure their response times, helping ensure that backend services and integrations remain reliable.
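An API check usually asserts more than reachability. The sketch below, under the assumption of a JSON endpoint that should return HTTP 200 with certain top-level keys, splits the validation into a pure function so the rules are easy to read and test. The function names, the required keys, and the 500 ms budget are hypothetical; connection errors are left to the caller.

```python
import json
import time
import urllib.error
import urllib.request


def validate_api_response(status: int, body: bytes, elapsed_ms: float,
                          required_keys: list, max_ms: float) -> list:
    """Return a list of problems; an empty list means the API check passed."""
    problems = []
    if status != 200:
        problems.append(f"expected HTTP 200, got {status}")
    try:
        payload = json.loads(body)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        problems.append("response body is not valid JSON")
        payload = {}
    if not isinstance(payload, dict):
        problems.append("expected a JSON object")
        payload = {}
    missing = [key for key in required_keys if key not in payload]
    if missing:
        problems.append(f"missing keys: {missing}")
    if elapsed_ms > max_ms:
        problems.append(f"slow response: {elapsed_ms:.0f} ms > {max_ms:.0f} ms")
    return problems


def check_api(url: str, required_keys: list, max_ms: float = 500.0,
              timeout_s: float = 10.0) -> list:
    """Call a JSON endpoint once and validate status, body and response time."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(request, timeout=timeout_s) as resp:
            status, body = resp.status, resp.read()
    except urllib.error.HTTPError as exc:  # 4xx/5xx still carry a status and body
        status, body = exc.code, exc.read()
    elapsed_ms = (time.perf_counter() - start) * 1000
    return validate_api_response(status, body, elapsed_ms, required_keys, max_ms)
```

Returning a list of problems instead of a single boolean makes the alert text explain what went wrong.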

Synthetic website monitoring

As the name suggests, synthetic website monitoring tests the availability and navigability of a website. It can include not only simple uptime checks but also more complex scenarios that simulate a user navigating through the site. With this type of monitoring, response times, page load times, and other performance metrics can be measured and analyzed.
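A navigation test can be sketched as a sequence of pages, each with text that must appear on it. This is a simplified, hypothetical illustration: it fetches raw HTML with `urllib` and a shared cookie jar, so it measures HTML download time only. Real website monitoring usually drives an actual browser to capture rendering and sub-resource load times.

```python
import time
import urllib.request
from http.cookiejar import CookieJar


def navigate(steps: list, timeout_s: float = 15.0) -> list:
    """Visit (url, expected_text) steps in order with one cookie-aware session.

    Returns one result per visited page and stops at the first failing page,
    mimicking a user who cannot continue once a page is broken.
    """
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(CookieJar()))
    results = []
    for url, expected_text in steps:
        start = time.perf_counter()
        try:
            with opener.open(url, timeout=timeout_s) as resp:
                html = resp.read().decode("utf-8", errors="replace")
            ok = expected_text in html
            error = None if ok else f"text {expected_text!r} not found"
        except OSError as exc:  # includes urllib.error.HTTPError and URLError
            ok, error = False, str(exc)
        load_ms = (time.perf_counter() - start) * 1000
        results.append({"url": url, "ok": ok, "load_ms": load_ms, "error": error})
        if not ok:
            break
    return results
```

Checking for expected text, not just a 200 status, catches pages that load but render an error message.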

Synthetic transaction monitoring

Synthetic transaction monitoring goes beyond synthetic website monitoring. It is used to test both websites and web applications to verify that they function correctly and that workflows run smoothly and without errors. Typical use cases include validating form submissions, verifying login processes, and checking that business-critical operations, such as shopping cart checkouts, work as expected.

These tests are often run at regular intervals from servers around the world to verify that critical functions remain continuously available. Monitoring third-party services is also essential, to ensure that external integrations do not degrade overall performance.
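Transactions differ from single-page checks in that each step depends on the previous one: you cannot check out without a session and a filled cart. The hypothetical sketch below models a transaction as an ordered list of named steps that share a context dictionary, stops at the first failing step, and records per-step timings. The step functions in the test are stand-ins for real browser or API actions.

```python
from __future__ import annotations

import time
from typing import Callable


def run_transaction(steps: list[tuple[str, Callable[[dict], None]]]) -> dict:
    """Run dependent steps in order; each step may read and write the shared context."""
    ctx: dict = {}
    timings_ms: dict[str, float] = {}
    for name, step in steps:
        start = time.perf_counter()
        try:
            step(ctx)
        except Exception as exc:  # the transaction fails at the first broken step
            timings_ms[name] = (time.perf_counter() - start) * 1000
            return {"ok": False, "failed_step": name, "error": str(exc),
                    "timings_ms": timings_ms}
        timings_ms[name] = (time.perf_counter() - start) * 1000
    return {"ok": True, "failed_step": None, "error": None, "timings_ms": timings_ms}
```

Reporting which step failed (login, cart, or payment) narrows the search for the root cause far more than a single "checkout broken" alert would.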

Real user monitoring vs synthetic monitoring: what is the difference?

Real user monitoring and synthetic monitoring deserve a word of comparison, as the terms are often confused. Real user monitoring is based on the actual data and interactions produced by end users on a system, whatever that system is. It therefore yields real-world data, unlike synthetic monitoring, whose data is computer-generated: a simulation of how real users might behave.

Synthetic and real user monitoring therefore share the same final objective, monitoring a system to ensure its optimal performance and operability, but they rely on completely different datasets. You do not have to choose between them, though. Both can be, and often are, implemented at the same time, sometimes even with the same tools. Synthetic monitoring is sometimes set up only on systems that are still in the testing phase and not yet in production; in other cases, it runs in parallel with real user monitoring on systems that are already live. The choice is up to your engineers and depends on your specific reasons for monitoring.

Historical data from synthetic and real user monitoring is complementary, and both kinds are valuable for benchmarking, identifying trends, and analyzing performance improvements over time.

Setting up synthetic monitoring

Establishing an effective synthetic monitoring system begins with identifying the most critical user interactions and processes within your application. Focus on high-impact user journeys, such as login flows, payment gateways, and other essential transactions that directly affect customer satisfaction and business outcomes. Once these key areas are mapped, select a synthetic monitoring tool that fits your organization’s requirements and budget and supports the types of synthetic tests you need.

Next, configure your synthetic monitoring system to simulate user interactions from different geographical locations and across different user devices. Set clear performance thresholds and define alerting criteria so your IT team is promptly notified of any anomalies or performance issues.
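Performance thresholds are commonly expressed as a warning level and a critical level, the convention Checkmk and other Nagios-derived tools use for service states (OK, WARN, CRIT). The sketch below is a generic illustration of that idea, not Checkmk's own implementation; the levels in the examples are assumptions, and combining several locations or devices by taking the worst state is one possible policy among others.

```python
from enum import IntEnum


class State(IntEnum):
    """Service states ordered by severity, so max() returns the worst one."""
    OK = 0
    WARN = 1
    CRIT = 2


def evaluate_levels(value: float, warn: float, crit: float) -> State:
    """Upper levels: larger values are worse (e.g. page load time in ms)."""
    if warn > crit:
        raise ValueError("warn level must not exceed crit level")
    if value >= crit:
        return State.CRIT
    if value >= warn:
        return State.WARN
    return State.OK


def worst_state(states) -> State:
    """Combine results from several locations or devices into one state."""
    return max(states, default=State.OK)
```

For example, `evaluate_levels(1250, warn=1000, crit=3000)` returns `State.WARN`, and an alert rule could notify only on `State.CRIT`.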

It’s also crucial to regularly review and update your synthetic monitoring scripts to keep pace with changes in your application and shifts in typical user behavior.

Integrating synthetic monitoring with CI/CD pipelines

Integrating synthetic monitoring into your CI/CD pipelines is a proactive approach that helps catch performance issues before they impact real users. By embedding synthetic tests into your continuous integration and delivery workflows, you can automatically validate your application’s performance with every code change or deployment. This makes it possible to detect regressions, broken user flows, or slow response times early in the development cycle.

Most modern CI/CD tools, such as Jenkins or GitLab CI/CD, support the automation of synthetic tests as part of your build and deployment process. You can also leverage APIs to connect synthetic monitoring tools directly with your pipeline, enabling seamless execution and real-time feedback.
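Jenkins and GitLab CI/CD, like most CI/CD systems, mark a job as failed when a step exits with a non-zero status. A thin gate script can therefore turn synthetic test results into a pass/fail decision for the pipeline. The sketch assumes an earlier step has written the results as a JSON list of objects with `name`, `ok`, and `error` fields; that file format and the function names are hypothetical.

```python
import json
import sys


def gate(results: list, max_failures: int = 0) -> int:
    """Return a process exit code: 0 if failures are within budget, else 1."""
    failures = [r for r in results if not r["ok"]]
    for r in failures:
        print(f"synthetic check failed: {r['name']}: {r.get('error')}", file=sys.stderr)
    return 0 if len(failures) <= max_failures else 1


def main(argv: list) -> int:
    """Read the results file named in argv[0] and return the pipeline exit code."""
    with open(argv[0], encoding="utf-8") as fh:
        return gate(json.load(fh))
```

In a standalone script, this would be wired up with `sys.exit(main(sys.argv[1:]))` and run as a pipeline step after the synthetic tests, so a broken user flow blocks the deployment.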

Dedicated, proactive monitoring solutions like Robotmk extend this concept further by allowing synthetic tests to become part of your monitoring environment as well as your delivery pipeline.

Integrating synthetic monitoring directly into CI/CD creates a continuous feedback loop that not only safeguards application performance but also accelerates development cycles and fosters a culture of quality and reliability.

Location-based synthetic monitoring

Location-based synthetic monitoring enables you to run tests from different environments to evaluate your application’s performance under varying regional and network conditions. To achieve this, you can distribute tests across multiple sites within your infrastructure or cloud environments, allowing you to identify latency, connectivity, or performance issues that may not be visible from a single location. This setup helps you ensure consistent availability and responsiveness for users across networks and devices.

As an example, Robotmk makes this possible by allowing the deployment of test agents or schedulers in multiple locations — such as branch offices or cloud instances — so that synthetic tests accurately reflect the experience of a global audience connecting from those environments.
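Once the same test runs from several sites, the useful question is which site deviates from the rest. A minimal sketch, assuming response times in milliseconds have already been collected per location; the location names and the 1.5 factor are illustrative, not recommended values.

```python
from statistics import median


def slow_locations(samples: dict, factor: float = 1.5) -> list:
    """Return locations whose median response time exceeds `factor` times
    the median of all per-location medians."""
    per_location = {loc: median(times) for loc, times in samples.items() if times}
    if not per_location:
        return []
    overall = median(per_location.values())
    return sorted(loc for loc, m in per_location.items() if m > factor * overall)
```

With `{"berlin": [80, 90, 85], "munich": [70, 75, 72], "singapore-cloud": [300, 320, 310]}` the function returns `["singapore-cloud"]`. Using medians rather than means keeps a single slow site, or a single slow run, from shifting the reference value.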

What are the benefits of synthetic monitoring?

There are clear benefits to implementing synthetic monitoring. It not only helps validate performance and stability before systems go live, but also ensures reliability and user satisfaction throughout the application’s lifecycle.

Performance validation

One of the most immediate advantages is the ability to validate a system’s performance. In what is sometimes referred to as synthetic performance monitoring, tests are designed to evaluate how a system behaves under stress and to determine whether modifications are required before deployment. Even after release, these tests continue to play a critical role by monitoring overall performance and ensuring that changes do not negatively impact end users or internal workloads.

By capturing detailed insights into system behavior, synthetic monitoring also enables early detection of potential issues and makes it easier to identify the root cause of errors or degradation.

Benchmarking and baselining

Synthetic transaction monitoring also makes it possible to establish a benchmark of expected performance. By observing how an application responds under a controlled series of synthetic tests, teams can set a baseline for normal conditions. Once the application is in production, its performance can be compared against this benchmark, providing immediate visibility into any decline in responsiveness or reliability.
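One simple way to express such a baseline, assuming response times are roughly stable under normal conditions, is the mean and standard deviation of a controlled series of runs, flagging later measurements that exceed the mean by more than k standard deviations. This is only one possible rule (percentile-based baselines are a common alternative), and k = 3 is an assumption.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass(frozen=True)
class Baseline:
    mean_ms: float
    stdev_ms: float

    @classmethod
    def from_samples(cls, samples: list) -> "Baseline":
        """Build a baseline from response times measured under controlled conditions."""
        if len(samples) < 2:
            raise ValueError("need at least two samples for a standard deviation")
        return cls(mean(samples), stdev(samples))

    def is_regression(self, value_ms: float, k: float = 3.0) -> bool:
        """True if a production measurement is well above the baseline."""
        return value_ms > self.mean_ms + k * self.stdev_ms
```

For samples of 200, 210, 190, 205, and 195 ms, the mean is 200 ms and the sample standard deviation is about 7.9 ms, so with k = 3 anything above roughly 223.7 ms is flagged.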

Accessibility testing

Another important benefit lies in accessibility monitoring. By simulating the behavior of users who rely on specific accessibility features, synthetic tests can reveal how inclusive and user-friendly a system or interface truly is. This perspective often highlights issues that may otherwise remain unnoticed during standard testing or through real user data.
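Full accessibility testing goes well beyond what fits here, but one small, automatable check is finding images without an `alt` attribute, which screen readers rely on. The sketch below uses Python's built-in `html.parser` and is only an illustrative heuristic. It deliberately accepts an empty `alt=""`, the conventional marker for decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <img ... />.
        if tag == "img":
            attr_map = dict(attrs)
            if "alt" not in attr_map:
                self.missing.append(attr_map.get("src") or "<no src>")


def images_missing_alt(html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing
```

Run against each page a synthetic navigation test visits, a check like this turns an accessibility regression into an ordinary test failure.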

Global user experience

Synthetic monitoring also enables teams to replicate the experience of users who are geographically distant from the datacenter. Developers and QA engineers can simulate typical request patterns, or configure tests to reflect routing conditions that mimic how users in a particular region would access the system. This makes it possible to uncover latency, connectivity, or performance issues that may vary significantly across different parts of the world.

Compliance and SLAs

Finally, synthetic monitoring contributes to compliance with service level agreements by providing objective performance measurements. These metrics help organizations verify that they are meeting their reliability and performance commitments, while also offering concrete evidence to stakeholders and customers that agreed standards are consistently maintained.
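Availability for SLA reporting is often approximated from synthetic check results as the share of successful checks in a period. A minimal sketch, assuming one check every five minutes (8,640 checks in 30 days) and a hypothetical 99.9% target. Real SLA definitions may instead count downtime minutes or exclude maintenance windows.

```python
def availability_pct(results: list) -> float:
    """Share of successful checks (True = passed) as a percentage."""
    if not results:
        raise ValueError("no check results in the reporting period")
    return 100.0 * sum(results) / len(results)


def meets_sla(results: list, target_pct: float) -> bool:
    """True if measured availability reaches the agreed target."""
    return availability_pct(results) >= target_pct
```

Under these assumptions, 8 failed checks out of 8,640 still meet the target (8,632 / 8,640 ≈ 99.907%), while a ninth failure drops availability to about 99.896% and breaches it.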

Synthetic monitoring main challenges

Synthetic monitoring is not without its challenges. Many of the difficulties in setting up a synthetic monitoring system lie in preparing the right synthetic tests. This becomes even more complex when trying to achieve true end-to-end monitoring across the mix of cloud and on-premises assets that many infrastructures use today. The larger the distributed environment, the harder it is for DevOps or engineering teams to simulate every possible scenario.

Errors can be missed if a certain location or situation is not anticipated and tested accordingly. Without accurate knowledge of the paths users take through your web app, for example, synthetic testing becomes a guessing game, so some groundwork is needed before you start implementing synthetic monitoring software.

Synthetic monitoring requires a specific skill set that not all engineers have. Different testing languages and frameworks use slightly different approaches to writing tests and building a working synthetic monitoring system. Without specialized knowledge already in your teams, synthetic monitoring can be difficult and time-consuming to set up.

Even minor UI changes in client-facing applications can cause test scripts to fail, triggering alerts and notifications and the inevitable bouts of worry for system administrators. It is important to have a good alerting and notification setup that avoids needless triggering and the resulting “alert fatigue.” While most such failures are trivial to resolve, synthetic monitoring still has a long way to go before it can claim to be a “set it once and forget it” solution.
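A common way to reduce alert fatigue is to notify only after several consecutive failures, so that a single flaky run, caused for instance by a slow-loading UI element, does not page anyone. Many monitoring systems offer a built-in setting for this; the class below is a generic, hypothetical sketch of the logic, with a threshold of three failures as an assumed default.

```python
from typing import Optional


class ConsecutiveFailureAlerter:
    """Emit an alert only after `threshold` failures in a row, and a recovery
    notice on the first pass after an alert."""

    def __init__(self, threshold: int = 3):
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self.failures = 0
        self.alerting = False

    def observe(self, passed: bool) -> Optional[str]:
        """Feed one test result; return "ALERT", "RECOVERED", or None."""
        if passed:
            self.failures = 0
            if self.alerting:
                self.alerting = False
                return "RECOVERED"
            return None
        self.failures += 1
        if not self.alerting and self.failures >= self.threshold:
            self.alerting = True
            return "ALERT"
        return None  # still below threshold, or already alerted
```

The trade-off is detection delay: with a five-minute interval and a threshold of three, a real outage is reported after about fifteen minutes instead of five.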

Conclusion

Despite the challenges, it is clear that synthetic monitoring is an incredibly useful tool in the arsenal of companies that want to optimize the reliability of their infrastructure. Monitoring tools have been moving towards integrating it or pairing up with specific synthetic monitoring frameworks. Checkmk is no exception.

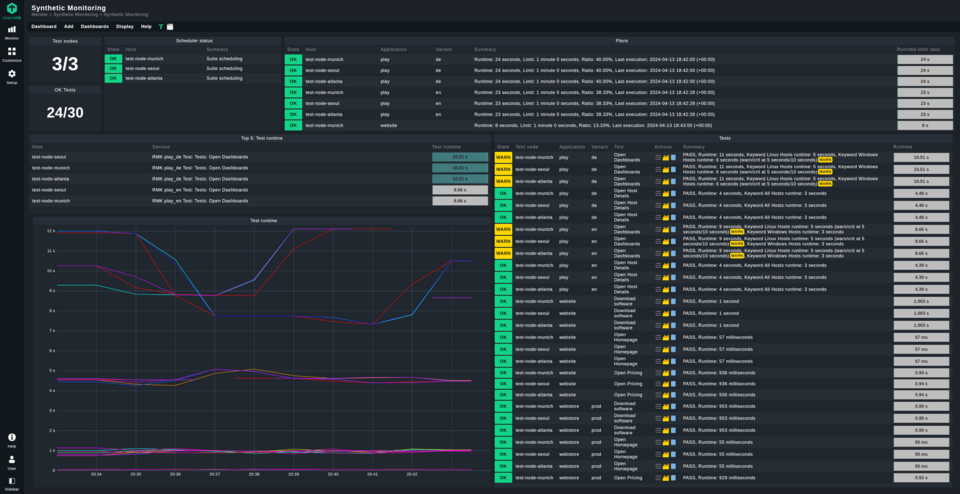

With Robotmk, Checkmk brings the power of the Robot Framework into the already automation-heavy features of Checkmk Cloud and Enterprise. Following up on the results of synthetic tests from Checkmk is now as easy as setting up a normal monitoring agent, with integrated views into the general Checkmk dashboards — a step further towards making synthetic monitoring nearly effortless.