All you need to know about container orchestration with Kubernetes

Containerized applications have changed the way companies are developing modern software. Containers provide a lot of flexibility for running cloud-native applications. Containers package up and encapsulate all the services and components of an entire application, making it portable across different environments. However, managing hundreds of containers over a distributed environment becomes a challenge. This is where container orchestration tools come into play.

Although there are many container orchestration tools, such as Docker Swarm or Apache Mesos, Kubernetes has become one of the most widely used platforms for container orchestration. Tech giants use Kubernetes to achieve scalability, portability, resilience, and better resource utilization in their applications. The CNCF Report 2022 confirms the broad adoption of Kubernetes by organizations all around the globe. Through Kubernetes, you can automate many container management and application deployment tasks.

This article describes container orchestration with Kubernetes and compares different Kubernetes distributions.

What is container orchestration?

Container orchestration automates the lifecycle management of containers across large, dynamic environments. It includes provisioning, deployment, networking, scaling, availability, and health monitoring.

Container orchestration also helps manage the connectivity between containers and the underlying infrastructure and provides monitoring and logging capabilities to ensure that applications run smoothly.

Overall, container orchestration helps automate containerized applications' deployment and management, which enables organizations to run and scale their applications in different environments quickly.

How does container orchestration work with Kubernetes?

A basic Kubernetes setup consists of a control plane (historically called the master node) for central control and management, worker nodes for running containers, a network for communication between nodes and pods, a container runtime, and the Kubernetes API. The Kubernetes API is the backbone of the Kubernetes ecosystem. It enables communication between the various components and allows users to define, manage, and control their containerized applications. The API also makes Kubernetes extensible and lets it integrate with external tools, which makes the platform more customizable and adaptable to diverse use cases.
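
To make "defining an application through the API" more concrete, here is a minimal sketch of the kind of object a user submits to the Kubernetes API. It builds a Deployment as a plain Python dictionary and prints it as JSON. The helper `deployment_manifest` and the values `web` and `nginx:1.25` are illustrative assumptions, not part of any Kubernetes library.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment object as a plain dictionary.

    Hypothetical helper for illustration; it only assembles data and
    does not talk to a cluster.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired number of identical pods.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # "web" and "nginx:1.25" are placeholder values.
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

The printed JSON describes a desired state (three replicas of one container image) rather than a sequence of commands. Saved to a file, a document like this could be submitted with a tool such as `kubectl apply -f`, after which Kubernetes works to make the cluster match it.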

As an orchestration platform, Kubernetes has the great advantage of being able to manage a large number of containers. The feature set includes automatic monitoring, deployment, repair, replication, and migration of containers. If you want to learn more about Kubernetes architecture and its main objects, read our blog article "What is Kubernetes?".
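
The automatic repair and replication described above rest on a simple idea: compare the desired state with the observed state and derive the actions that close the gap. The following is a simplified sketch of that idea, not Kubernetes' actual controller code. The `Container` class, the `reconcile` function, and the action strings are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Container:
    name: str
    healthy: bool


def reconcile(desired: int, observed: List[Container]) -> List[str]:
    """Return the actions needed to reach `desired` healthy replicas."""
    actions = []
    healthy = [c for c in observed if c.healthy]

    # Repair: unhealthy containers are removed and replaced, not patched.
    for c in observed:
        if not c.healthy:
            actions.append(f"remove {c.name}")

    missing = desired - len(healthy)
    if missing > 0:
        # Scale up (or replace removed containers).
        actions.extend(["start new replica"] * missing)
    elif missing < 0:
        # Scale down: stop the surplus healthy containers.
        for c in healthy[desired:]:
            actions.append(f"stop {c.name}")
    return actions


if __name__ == "__main__":
    state = [Container("web-1", True), Container("web-2", False)]
    for action in reconcile(desired=3, observed=state):
        print(action)
```

In this example one of two containers is unhealthy and three replicas are wanted, so the function proposes removing the unhealthy container and starting two new replicas. Running such a comparison continuously is what allows an orchestrator to recover from failures without manual intervention.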

What are Kubernetes distributions?

A Kubernetes distribution is a preconfigured package for Kubernetes, provided by commercial vendors or open source communities. Kubernetes distributions are intended to simplify the configuration and management of Kubernetes in production environments.

While you need to configure and deploy the original (vanilla) Kubernetes yourself, which requires expertise, Kubernetes distributions bundle the core elements with additional components and features that make Kubernetes easier to deploy, scale, and maintain.

Among other things, they often simplify setting up Kubernetes clusters on various cloud platforms or bare-metal servers. Additional features and components include ingress controllers, container network interface (CNI) plugins, and persistent volume resources, as well as integrations with other tools such as monitoring solutions or log analysis platforms. Many distributions also include security and compliance features to better protect the cluster, and maintenance mechanisms that, for example, simplify updating to new Kubernetes versions.

What are the different types of Kubernetes distributions?

There are different types of Kubernetes distributions, which differ in purpose, intended use, and feature set. For example, some Kubernetes distributions can be hosted on-premises, some run in the cloud, and some are available as a cloud service. In addition, the level of self-management varies between Kubernetes distributions.

With self-managed Kubernetes, the user is responsible for running the cluster, managing the operating system on the nodes, software upgrades, backups and other maintenance tasks. However, the user has full control over the cluster and its configuration, allowing them to tweak the cluster as needed.

With managed Kubernetes, a cloud provider or service provider maintains the cluster infrastructure and performs upgrades, backups, and other maintenance tasks. The user, on the other hand, is responsible for the Kubernetes applications and has only limited control over the clusters. Vendors typically provide some level of support for managed Kubernetes.

In summary, self-managed Kubernetes offers more control and customization, but also requires more expertise and effort to set up and maintain, while managed Kubernetes offers less control, but more support and ease of use.

Advantages of self-managed Kubernetes

Some of the advantages of self-managed Kubernetes are the following:

Control: You have complete control of your cluster and can configure everything according to your requirements. Compliance and regulatory obligations often require you to use your own infrastructure instead of shared infrastructure on the public cloud.

Customization/flexibility: Self-managed Kubernetes gives you more flexibility over your cluster's setup, deployment, and management. You can customize the cluster to your needs by selecting the hardware, networking, and storage solutions.

Cost-effectiveness: Because of its flexibility, a self-managed Kubernetes environment offers many opportunities for cost control. With freedom of choice, organizations can obtain affordable infrastructure and compute resources for their Kubernetes clusters from cloud providers or local data centers, and tailor their Kubernetes deployments to reduce licensing costs, for example.

Considerations for using self-managed Kubernetes

While self-managed Kubernetes offers greater flexibility and adaptability, it requires specialized knowledge of Kubernetes administration, infrastructure, and security, as well as a significant investment in resources and maintenance.

DevOps engineers must regularly maintain and update the cluster, and organizations must ensure they have sufficient resources to handle workloads and scale the cluster. Maintaining a self-managed Kubernetes cluster requires regular monitoring, troubleshooting, and data backups to ensure data resiliency.

Despite the increased overhead, however, self-managed Kubernetes may be attractive to organizations that have specific needs not covered by a managed Kubernetes service.

Advantages of managed Kubernetes

Managed Kubernetes has its own set of advantages, such as:

Focusing on business features: Managed Kubernetes abstracts away the complexity of managing infrastructure, networking, and storage. It frees development teams from managing the underlying infrastructure so they can focus on building applications. Faster development and deployment reduce time to market and development costs.

Easier maintenance: With managed Kubernetes services, organizations do not have to worry about managing and maintaining the Kubernetes cluster. The cloud provider handles infrastructure deployment, configuration, management, upgrades, patching, and scalability. This enables enterprises to concentrate on creating and delivering their applications faster.

Scalability and reliability: Managed Kubernetes solutions provide automatic scaling, high availability, and impressive service level agreements (SLAs), which makes your application more reliable and scalable.

Integration with other services: Managed Kubernetes solutions provide strong integration with other companion services offered by the vendor. Some examples include CI/CD pipelines, databases, monitoring tools, etc.

Considerations when using managed Kubernetes

Implementing a managed Kubernetes service can bring significant benefits to an organization. At the same time, however, it requires thorough planning and preparation to enable a smooth implementation and ensure that business needs are met.

Organizations with strict compliance standards may have concerns about cloud providers' security and compliance certifications. Choosing Kubernetes managed by a cloud service provider can also mean lock-in to a provider and its ecosystem, as the tight integration of services limits options for switching providers or pursuing multi-cloud approaches. Additionally, the lack of control over the control plane (the master nodes) can be problematic for organizations that require more customization or control.

Finding the right Kubernetes distribution

Many factors go into choosing the right Kubernetes distribution, and how much each one matters depends on your organization's individual needs.

Identifying your use case

The first step in selecting a Kubernetes orchestration tool is for the organization to identify the business requirements, or use case. The following factors should be considered:

Type of application: Will the application run in a hybrid or multi-cloud environment? Is specific integration required? For example, GKE is suitable for AI-intensive applications, as it offers strong integration with TensorFlow and TPUs.

Scalability: What scaling options does the business need for its applications? Managed Kubernetes solutions from a cloud provider have the advantage of built-in scaling options. The various Kubernetes distributions can differ significantly when it comes to scaling. For example, some distributions offer efficient, automated scaling of nodes based on the needs of the cluster, while others do not.

Resource requirements: Does the application have particular resource requirements, such as high CPU or high I/O?

Availability: Is integrated high availability or fault tolerance required?
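
To illustrate what automated scaling means in practice, the sketch below follows the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. This covers pod-level scaling only; node-level scaling works differently. The function name, the default bounds, and the sample numbers are assumptions, and refinements such as tolerance and stabilization windows are omitted.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified replica calculation, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale proportionally to how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale up.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # 4 replicas at 30% average CPU with a 60% target: scale down.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```

When comparing distributions, it is worth checking which of these mechanisms are preconfigured and which metrics they can act on.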

Is the tool compatible with the existing infrastructure?

The next step is to ensure that the tool is compatible with the existing infrastructure and applications. To do this, the tool should

- be compatible with existing operating systems,

- fit the existing virtualization or containerization model,

- support existing integrations, and

- be compatible with the network infrastructure.

Ease of use

The evaluation should also cover how easy it is to work with the desired solution. Some important questions in this context are:

- Does the tool require a long learning process?

- Is the tool's interface user-friendly?

- Does it require much effort and skill to set up and maintain?

- Does the tool support automating different tasks?

Support

Support is another essential factor when choosing the right orchestration tool. Some factors to consider are:

- Does the tool support automatic software updates?

- Does the tool provide SLA-based support?

- Does the tool provide technical support 24/7?

- How much community support is available?

Cost

Other aspects are related to costs. Companies should consider the following things when choosing their preferred Kubernetes solution:

- What are the licensing costs for the tool?

- How much will it cost to train the staff?

- What are the associated infrastructure costs?

- What are the cloud-related costs associated with this tool?
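
One way to combine the criteria discussed so far (use case, compatibility, ease of use, support, and cost) is a weighted decision matrix. The sketch below is only an illustration of the arithmetic: the candidate names, the weights, and the 1 to 5 scores are invented placeholders that each organization would replace with its own assessment.

```python
from typing import Dict


def weighted_score(scores: Dict[str, int], weights: Dict[str, int]) -> float:
    """Weighted average of per-criterion scores (higher is better)."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * w for criterion, w in weights.items()) / total_weight


# Hypothetical weights: how much each criterion matters to the organization.
WEIGHTS = {"use_case": 3, "compatibility": 2, "ease_of_use": 2, "support": 1, "cost": 2}

# Hypothetical scores from 1 (poor) to 5 (excellent) for two made-up candidates.
CANDIDATES = {
    "distribution_a": {"use_case": 5, "compatibility": 4, "ease_of_use": 2, "support": 3, "cost": 4},
    "distribution_b": {"use_case": 3, "compatibility": 3, "ease_of_use": 5, "support": 5, "cost": 3},
}

if __name__ == "__main__":
    ranked = sorted(
        CANDIDATES, key=lambda name: weighted_score(CANDIDATES[name], WEIGHTS), reverse=True
    )
    for name in ranked:
        print(name, round(weighted_score(CANDIDATES[name], WEIGHTS), 2))
```

The result is only as good as the weights and scores that go in, so a matrix like this is best used to structure the discussion rather than to replace it.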

When evaluating the right Kubernetes platform, monitoring the Kubernetes infrastructure is another point that should not be ignored. Without monitoring, you will only get rudimentary or limited insights into the health of the Kubernetes clusters and their resource utilization. Therefore, the rest of this article will focus on this aspect.

Why monitor Kubernetes?

Monitoring a Kubernetes infrastructure is essential for detecting and fixing any issue promptly. Kubernetes is a complex solution with many components and services working simultaneously. The malfunctioning of a single service or part can break an application.

Note that Kubernetes does not include an integrated monitoring solution with enterprise features, so you will need to add separate monitoring software to the default Kubernetes installation.

The situation is different for managed Kubernetes services, which have monitoring tools provided by the respective cloud provider. However, these are limited to the provider's cloud environment.

For this reason, it is reasonable to use a third-party monitoring solution that can run on the cluster and is portable across environments and providers.

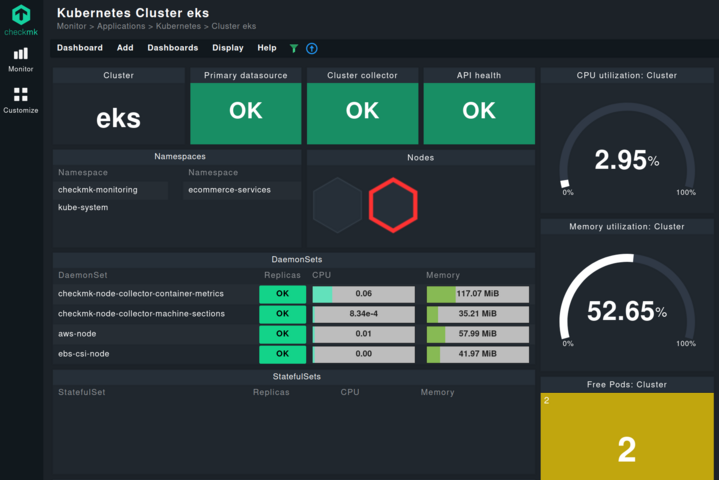

One such Kubernetes monitoring solution is Checkmk. Checkmk not only monitors the resource utilization and overall health of all Kubernetes objects, but also provides real-time insights into cluster performance. Thanks to its intuitive, interconnected Kubernetes dashboard, you can analyze your Kubernetes environment from the cluster down to the pod level with just a few clicks.

Through proper monitoring, you can achieve cost optimization, security across all layers, performance tuning, etc. Implementing a proactive approach to monitoring is crucial.

When monitoring Kubernetes, keep an eye on the following key metrics:

- CPU, I/O, and memory used by different processes.

- Processes being executed in the running containers.

- Container restarts.

- Spikes in the CPU or memory of one or more containers.

- Container size and output of other containers.
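
Two of the metrics above, container restarts and resource spikes, lend themselves to a small illustration. The sketch below shows how such checks could work on already collected samples. It does not describe how Checkmk or any other monitoring tool implements them; the function names, the spike factor of 2.0, and the sample data are assumptions.

```python
from statistics import mean
from typing import Dict, List


def restarted_containers(previous: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Names of containers whose restart counter increased between two samples."""
    return sorted(
        name for name, count in current.items() if count > previous.get(name, 0)
    )


def has_spike(samples: List[float], factor: float = 2.0) -> bool:
    """True if the latest sample exceeds `factor` times the mean of the earlier ones."""
    if len(samples) < 2:
        return False
    baseline = mean(samples[:-1])
    return baseline > 0 and samples[-1] > factor * baseline


if __name__ == "__main__":
    # Made-up restart counters from two consecutive polls.
    before = {"web": 0, "db": 1}
    after = {"web": 2, "db": 1, "cache": 0}
    print("restarted:", restarted_containers(before, after))

    # Made-up CPU usage in cores; the last value is the most recent.
    cpu = [0.20, 0.25, 0.15, 0.90]
    print("cpu spike:", has_spike(cpu))
```

A production monitoring system would add history, thresholds per workload, and alert routing, but the underlying comparisons are of this kind.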

Final thoughts

This article discussed different Kubernetes installations, including vanilla, cloud-managed, and self-managed Kubernetes. We discussed each option's pros and cons and the use cases for each type. Different factors decide the selection of the right Kubernetes solution, like application type, scalability, resources, and availability needs.

Vanilla Kubernetes is the "pure", unmodified version of Kubernetes from the open source project. It allows complete control and therefore the most flexibility in customizing Kubernetes. However, this requires a deep knowledge of Kubernetes and its components, so vanilla Kubernetes is best suited for experienced DevOps teams or technically savvy individuals and organizations.

Managed Kubernetes distributions, such as AKS, EKS, or GKE, are versions of Kubernetes that are pre-configured and managed by cloud providers or specialized service providers. The providers take care of the deployment, maintenance, and scaling of the Kubernetes clusters. This allows development teams, for example, to focus on developing applications, as they are relieved of managing the infrastructure.

A self-managed Kubernetes distribution is a version where the user is responsible for deploying, configuring, maintaining, and managing the Kubernetes cluster. It requires expertise and resources, but offers the most customization options. A self-managed Kubernetes distribution is therefore suitable for organizations with Kubernetes expertise and the infrastructure to support it.

Regardless of the Kubernetes type, Kubernetes orchestration requires proactive monitoring of the clusters and their components. Solutions like Checkmk can provide comprehensive monitoring with data-rich dashboards, real-time alerts, and valuable insights into the health of Kubernetes.