SNMP and other monitoring protocols

A frequent question that pops up when thinking about how to monitor an infrastructure is: What protocol should I use? And often the next is: SNMP or another protocol?

There are indeed quite a few modern network protocols that compete in the area of monitoring, or more generally in managing and configuring hosts. Rather than attempting a full comparison of vastly different network protocols, we want to present a few that are used in the field of network monitoring. Not all of these were born with monitoring in mind but they can nonetheless be used to check on the health of devices and hosts.

We will start with one of the most widespread protocols we will review, SNMP.

What is SNMP

We already talked elsewhere about what SNMP is, so we will keep it brief here. SNMP (Simple Network Management Protocol) has been around for a long time. The first version was published as an RFC (Request for Comments) in 1988. With this head start, it is only natural that SNMP became the de facto standard in monitoring and configuring networks.

Its concept of traps, asynchronous notification from agent to manager, caught on quickly. SNMP was also responsible for popularizing the idea of an agent-based protocol. SNMP is defined by the IETF (Internet Engineering Task Force) and since its inception has gone through three major versions. The second is still largely used, despite being less reliable and secure than the third.

How SNMP works

The protocol works with the concept of managers and agents: the manager collects the metrics from the various agents. The agents can either send the diagnostic info themselves, in an event-based manner, by using SNMP traps, or wait to be polled by the manager. In the latter case, the manager emits an SNMP Get command to ask for info from one or more of the agents spread across the network. SNMP uses UDP for both: port 161 for Get requests and port 162 for traps.

Security-wise, SNMP is not the strongest protocol out there. Initially only “community strings”, pre-established strings that act like passwords, were implemented. They are sent in plaintext over the network, raising far more than one security concern. The widely deployed second version, SNMPv2c, kept the community strings and thus did little to improve the security of the data, which is still transmitted in the clear. Only the third version, SNMPv3, introduced user-based authentication and encryption, which help ensure that the communication is between the right agents and managers and that the data cannot be read in transit.

The main power of SNMP lies in the large range of data that agents can collect on the devices they are present on. The variables accessible through SNMP are organized in hierarchies, which can be custom-defined. These hierarchies are described in MIBs (Management Information Bases), which define the structure of the management data on a system. A MIB is made of various OIDs (Object Identifiers), each identifying a variable that can be read and sent by SNMP agents. OIDs are not specific to SNMP, but an international standard way to identify any object or concept in computing.
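To make the idea of a hierarchy more tangible, here is a minimal Python sketch that treats OIDs as paths in a tree. The handful of entries comes from the standard MIB-2 `system` and `interfaces` branches. The helper names (`parse_oid`, `is_under`, `describe`) are our own invention for illustration and not part of any SNMP library.

```python
# Illustrative subset of the standard MIB-2 tree; a real MIB holds far more.
KNOWN_OIDS = {
    "1.3.6.1.2.1.1": "system",
    "1.3.6.1.2.1.1.1": "sysDescr",
    "1.3.6.1.2.1.1.3": "sysUpTime",
    "1.3.6.1.2.1.2": "interfaces",
}


def parse_oid(text):
    """Turn '1.3.6.1' into the tuple (1, 3, 6, 1)."""
    return tuple(int(part) for part in text.strip(".").split("."))


def is_under(oid, subtree):
    """True when `oid` lies in the hierarchy rooted at `subtree`."""
    oid_parts, subtree_parts = parse_oid(oid), parse_oid(subtree)
    return oid_parts[:len(subtree_parts)] == subtree_parts


def describe(oid):
    """Name an OID by its longest known prefix, keeping the rest as a suffix."""
    parts = parse_oid(oid)
    for length in range(len(parts), 0, -1):
        prefix = ".".join(map(str, parts[:length]))
        if prefix in KNOWN_OIDS:
            suffix = ".".join(map(str, parts[length:]))
            return KNOWN_OIDS[prefix] + ("." + suffix if suffix else "")
    return oid  # nothing known about this branch
```

For example, `describe("1.3.6.1.2.1.1.3.0")` resolves to `sysUpTime.0`, the instance a manager would typically request with a Get.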

SNMP use cases

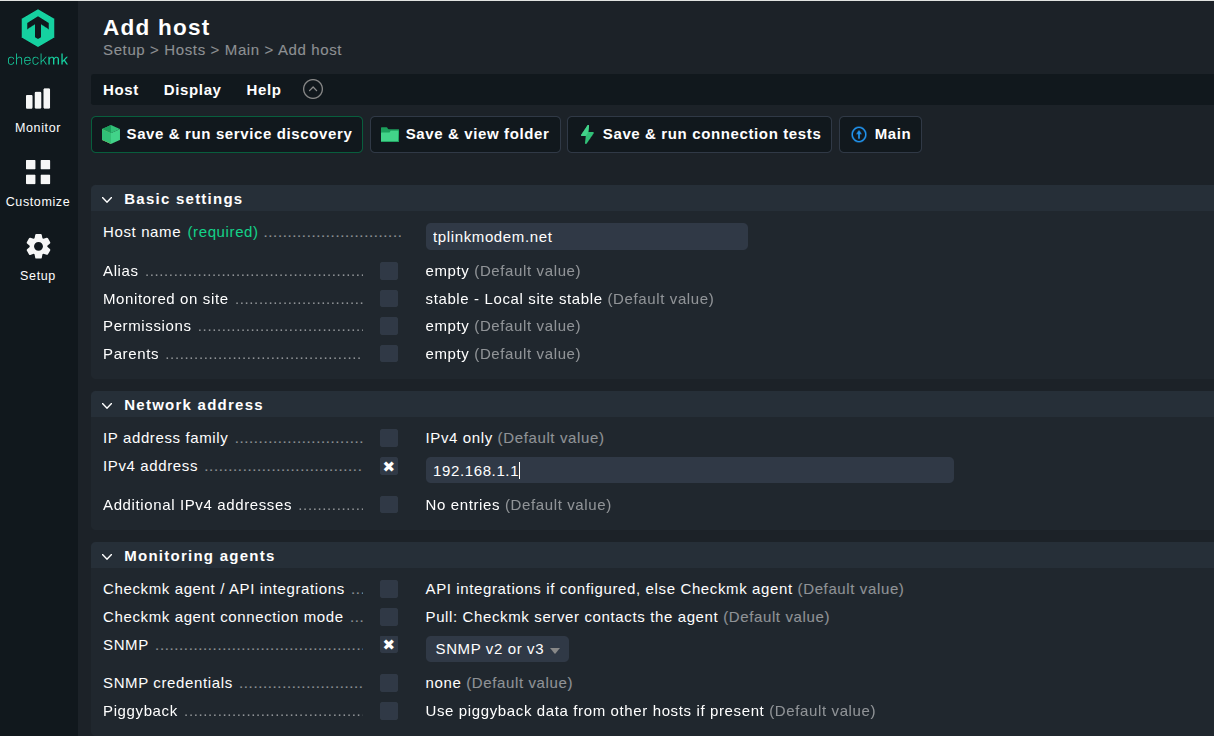

Another strength of SNMP lies in its ubiquity. It is much easier to monitor devices that need nothing extra installed on them because they already support the protocol. With other monitoring protocols, this is rarely the case, as some work only on certain operating systems or are not pre-installed by vendors on their devices. SNMP is the easiest to set up in many cases thanks to the support vendors build into their network devices.

SNMP traps may be the best-known mode, but in practice polling-based monitoring, the Get, is what SNMP is most used for. Both have their uses, obviously, and different advantages.

SNMP allows the administrator to retrieve and be alerted about metrics like cooling, voltage, temperature and others that are often not reported by other monitoring protocols. In server monitoring, knowing the health status of the hardware is vital to maintain a working infrastructure.

More commonly, SNMP allows a constant flux of metrics to be collected over numerous hosts. These metrics can be centralized and later analyzed, or checked in near real-time by a monitoring tool that supports SNMP. Being very comprehensive, SNMP is frequently the first choice of network administrators to start monitoring.

What is Syslog

Syslog's main scope is collecting logs on Unix-like systems. Unlike most other protocols in this article, Syslog is platform-specific.

Syslog became the standard for collecting logs from applications and devices on Unix and its descendants. It was not born to do it, though, as it was developed by Eric Allman in the early 1980s to provide a log system for the e-mail server Sendmail. It worked so well that other Unix applications started to support it, and Syslog's dominance on those systems began.

Nowadays, it is present on many Unix-like systems in one of its evolutions (Rsyslog, syslog-ng). In recent years, it has slowly been superseded on many Linux systems by systemd’s journal.

How Syslog works

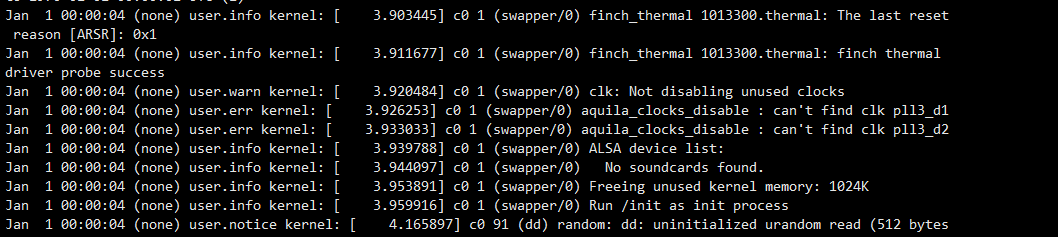

Syslog transmits logs. It takes a source and destination, both configurable, and sends what it receives from services and applications from the former to the latter. Practically speaking, a log message (described in RFC 3164) is created, whose message part contains two fields: the TAG and the CONTENT. The later RFC 5424 split the TAG into several separate fields, but the core concept is that the TAG contains the name of the process or program generating the message, and the CONTENT the details of it.
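As a rough illustration of the TAG and CONTENT split, the following Python sketch picks apart a message in the classic BSD layout. The regular expression and the function name `parse_bsd_syslog` are our own assumptions. Since real-world messages deviate from the layout in many ways, treat this as a starting point rather than a complete parser.

```python
import re

# BSD-style layout: <PRI>TIMESTAMP HOSTNAME TAG[PID]: CONTENT
BSD_SYSLOG = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<tag>[A-Za-z0-9_./-]+)(?:\[(?P<pid>\d+)\])?: ?"
    r"(?P<content>.*)$"
)


def parse_bsd_syslog(line):
    """Return the fields of a BSD-style message, or None if it does not fit."""
    match = BSD_SYSLOG.match(line)
    if match is None:
        return None
    return match.groupdict()
```

Fed with the example message from RFC 3164, `<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8`, the function reports `su` as the TAG and the rest of the line as the CONTENT.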

Syslog is not just there to pass logs around, but is also a system to categorize and then parse the logs. Syslog introduced a numeric code from 0 to 23 called “facility” that is attached to every log and identifies its origin: 0 stands for kernel-level messages, 1 for user-level messages, 2 for the mail system and so on. This allows administrators to easily filter logs and find what they care about more quickly.

Another numerical code, this time going from 0 to 7, represents the severity. Zero is the most critical, labeled as “emergency”, while seven is just used for debugging.
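On the wire, the two codes travel together as a single priority number at the start of each message. RFC 3164 defines it as the facility multiplied by 8 plus the severity. A small Python sketch of that arithmetic follows; the function names are ours, and the severity labels follow the usual naming.

```python
SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def encode_pri(facility, severity):
    """Combine facility (0-23) and severity (0-7) into one priority value."""
    if not 0 <= facility <= 23:
        raise ValueError("facility must be between 0 and 23")
    if not 0 <= severity <= 7:
        raise ValueError("severity must be between 0 and 7")
    return facility * 8 + severity


def decode_pri(pri):
    """Split a priority value back into facility, severity and its label."""
    facility, severity = divmod(pri, 8)
    return facility, severity, SEVERITIES[severity]
```

A message starting with `<34>` therefore comes from facility 4 with severity 2, a critical condition.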

Filtering of logs, albeit rudimentary, is also internally supported by Syslog. Regular expressions can be used to cut through the bulk of logs to find what exactly is necessary for monitoring purposes.

Syslog use cases

Syslog deals with logs, which often come with a larger range of data and are by default historical. In that respect it offers more than many network monitoring protocols. When you need a large historical view, with plenty of detail, log management software such as Syslog fits the bill.

These logs can be received from many processes and sent to various destinations, commonly either a local file or a remote Syslog server. For local debugging, a console destination is also available. Developers and administrators alike use this feature to quickly determine the cause of a service or application’s malfunction.

Troubleshooting specific services by perusing logs is one of the many tasks of administrators. Syslog creates a space where those logs can be collected and analyzed.

It is not always rosy, though. Syslog was born out of a single application, and many others started supporting it on their own, without a standard to follow, thereby outputting logs that deviate from the original format. The different ways in which applications create logs are the greatest pitfall of Syslog. It is complicated to analyze logs that are all formatted slightly differently from each other. What originally was a way to unify the messages of each application failed as every developer went their own way.

This does not make Syslog useless, however. It just makes it more complex to find the info necessary for monitoring with it. Various implementations followed the original one, trying to correct the issues and improve the protocol. Nowadays, Syslog is slowly being replaced on many Linux systems by systemd’s journaling capabilities.

What is ICMP

ICMP is a protocol developed in 1981, used to exchange information about the status and errors in IP networks, or check the connections to IP destinations (ping).

It is an integral part of the larger IP protocol, operating on the network layer of the OSI stack.

How ICMP works

ICMP uses IP as a communication base by presenting itself as a higher-layer protocol. This means that ICMP packets are encapsulated in IP packets.

The type of the ICMP packet is specified as an 8-bit number at the beginning of the ICMP header. Then the ICMP code is specified by the following code field, which is also 8 bits long. The combination of type and code classifies the ICMP packet. In theory there could be 256 ICMP types, each with up to 256 codes, but most are deprecated or unassigned. 43 are actually classified. Of these, only a few combinations of type and code are commonly used.
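The header layout can be sketched in a few lines of Python. The example below builds an Echo Request (type 8, code 0), the message used by ping, and fills in the 16-bit Internet checksum that follows the type and code fields. It only constructs the bytes. Actually sending them requires a raw socket and elevated privileges, which is left out here. The function names are our own.

```python
import struct


def internet_checksum(data):
    """16-bit one's-complement checksum as used by ICMP (see RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:  # fold the carries back into the lower 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(identifier, sequence, payload=b""):
    """Return the bytes of an ICMP Echo Request: type 8, code 0."""
    # The checksum is first computed with its own field set to zero.
    header = struct.pack("!BBHHH", 8, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, identifier, sequence) + payload
```

A receiver can validate such a packet by running the same checksum over all of its bytes: for an intact packet the result is zero.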

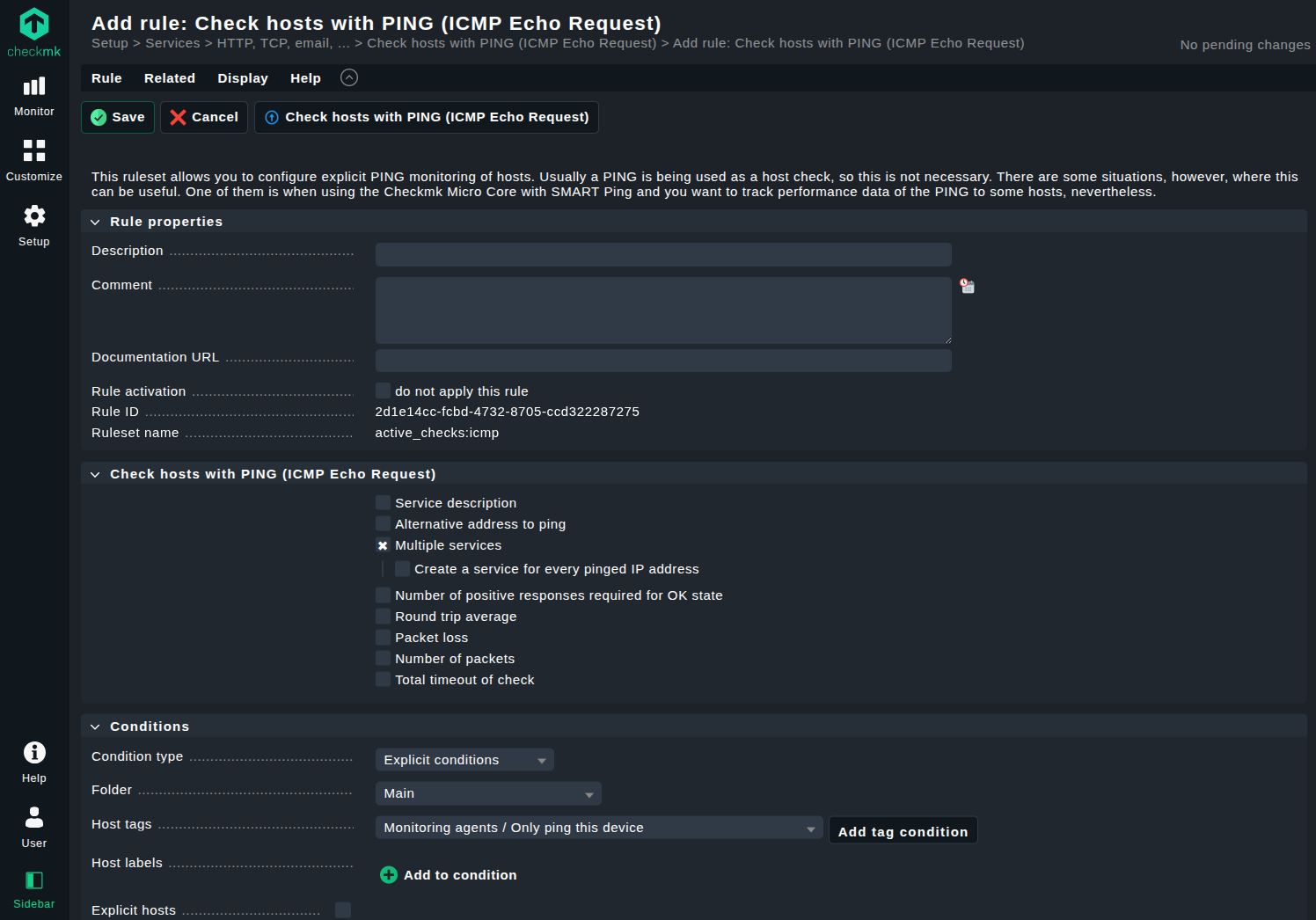

The best known are type 8, code 0 (Echo Request) and type 0, code 0 (Echo Reply). These are used by the “ping” utility, and sending them is referred to simply as “pinging”. They constitute a method to check whether a network host is alive and responding. The Echo Request message is sent in an ICMP packet to an IP address. If that address is alive and allowed to reply to this type of request (often such requests are blocked at firewall level), the answer will be an ICMP Echo Reply, and everything works. If no answer is received, usually the host is down or ICMP requests are dropped by a firewall.

If, on the way to an address, a host cannot find a route to it, it replies with an ICMP Destination Unreachable message (type 3, code 1). There are 15 more codes within the type 3 ICMP messages, each specifying a reason for a host not replying. By analyzing this code, basic network monitoring can be done through ICMP.

Another big part of ICMP’s usefulness is the command line tool “traceroute” (or “tracert” on Windows systems). It traces the path from a source to a destination by exploiting the TTL (Time-To-Live) field of the IP header. Every router a packet passes through lowers the TTL by one, and a router that lowers it to 0 discards the packet and answers with an ICMP Time Exceeded message. Traceroute sends its first probe with a TTL of 1, so that the first router on the path answers, then raises the TTL by one for each following probe until the destination replies or a maximum number of hops is reached. The probes themselves are ICMP Echo Requests on Windows, while many Unix implementations send UDP packets by default; the answers from the routers are ICMP messages in both cases. Through this method, you learn which stations are passed, the response time of each, and which routers cannot be reached. Problems like these, or routing loops, can thus be identified.
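The TTL mechanism is easier to follow as a small simulation. The Python sketch below does not touch the network. It models a path as a list of router names ending with the destination, sends probes with TTL 1, 2, 3 and so on, and records who answers each one. The names `probe` and `traceroute` and the sample path are purely illustrative.

```python
def probe(path, ttl):
    """Follow one probe along `path` (router names, destination last)."""
    for hop in path[:-1]:
        ttl -= 1  # every router on the way decrements the TTL
        if ttl == 0:
            # The router discards the probe and reports back to the sender.
            return hop, "time exceeded"
    return path[-1], "reached"


def traceroute(path, max_hops=30):
    """Send probes with increasing TTL and list who answered each one."""
    hops = []
    for ttl in range(1, max_hops + 1):
        responder, status = probe(path, ttl)
        hops.append((ttl, responder))
        if status == "reached":
            break
    return hops
```

Calling `traceroute(["gateway", "isp-router", "core-router", "server"])` lists the three routers in order, followed by the destination itself on the fourth probe.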

ICMP use cases

From the above, a main use case can be easily understood. ICMP is used to ping hosts over a network and depending on how they reply, issues can be identified. As far as monitoring networks is concerned, that is the main use of ICMP.

Secondly, but no less important, the traceroute utility relies on ICMP to identify the path actually taken to a destination, measure the latency of each hop on the way, and discover routing problems.

ICMP, with ping and traceroute, is thus a simple yet powerful way to quickly and efficiently discover what is working and what is not in your network. Bottlenecks in the infrastructure can be discovered through the traceroute command, as a congested host may not be able to properly reply to an ICMP request. The problem can be brought to light in a few seconds with a single command.

One of the limitations of ICMP is that the “why” of a network issue is often only implied, not specifically expressed. The protocol is not as complex as others and more focused on quick troubleshooting rather than the extensive collection of metrics. Further investigation is necessary to understand the causes of an issue. But despite this, ICMP remains an invaluable source of insight for any network administrator.

What is NetFlow

NetFlow is a fairly old technology for network monitoring, developed internally at Cisco in 1996. It collects information about IP traffic as it enters and exits an interface. Like SNMP, it is specifically a network monitoring protocol.

Until version 5, the protocol pertained to Cisco devices only. Today, it is present on many other network vendors’ routers and switches in their own implementations. These roughly follow Cisco’s original version while using various names (Jflow, NetStream, Cflowd, Rflow and more). NetFlow can in part substitute SNMP, as it is widespread on many network devices like routers and switches, even if its scope is different.

How NetFlow works

NetFlow’s architecture is based on the assumption that a “collector” exists to receive info from the various devices and store it. This collector is usually a server running in the network where the NetFlow-enabled routers and switches are. An “exporter”, typically the router or switch itself, takes care of aggregating packets into flows and sending them to the collector. A third element, the analysis application, is in charge of analyzing the flow data gathered by the collector.

NetFlow defines a flow as a sequence of related packets, from a source to a destination. Every flow is identified by seven values: the ingress interface, the source and destination IP addresses, the IP protocol carried (TCP, UDP, ICMP and so on), the source and destination ports (for TCP and UDP), and the IP Type of Service. These flows are thus a summary of a connection, a flow of data from somewhere to somewhere else. By collecting and analyzing these flows, a network administrator can identify bottlenecks, disturbances, and larger issues in a network.
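To show what summarizing packets into flows means in practice, here is a simplified Python sketch. It groups packets by the fields that identify a flow, including the ingress interface that NetFlow conventionally uses as a key field, and keeps a packet and byte counter per flow. The packet dictionaries, the `FlowKey` tuple and the function names are assumptions made for this example. A real exporter also tracks timestamps and expires idle flows.

```python
from collections import defaultdict, namedtuple

FlowKey = namedtuple(
    "FlowKey",
    "in_interface src_ip dst_ip protocol src_port dst_port tos",
)


def aggregate(packets):
    """Group packet dicts into flows; each flow counts packets and bytes."""
    flows = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for pkt in packets:
        key = FlowKey(*(pkt[field] for field in FlowKey._fields))
        flows[key]["packets"] += 1
        flows[key]["bytes"] += pkt["length"]
    return dict(flows)


def top_talkers(flows, n=3):
    """Rank source addresses by the total bytes they sent."""
    totals = defaultdict(int)
    for key, stats in flows.items():
        totals[key.src_ip] += stats["bytes"]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]
```

The `top_talkers` helper hints at the kind of question an analysis application answers from such flow records, for instance which host is using the most bandwidth.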

NetFlow use cases

It is easy to see how NetFlow provides a “bird’s-eye view” of what is going on inside a network: where packets come from, where they go, and where they get stuck. Unlike more in-depth network monitoring protocols, NetFlow gives an overview-like set of metrics that are of great use to know where attention must be paid to fix or improve the flow of data.

Not only that, though. More generally, NetFlow is useful to learn where most of your traffic is going to and from. This can highlight where additional capacity is necessary to relieve devices from a too heavy burden.

NetFlow can help administrators know what host is using the most bandwidth, and for what purpose. A host streaming videos for private use can be quickly discovered by analyzing NetFlow’s flows, and adequate measures can be taken. Companies that need to calculate the network usage for billing, for example, can exploit NetFlow to precisely know how much data has been used by each host.

Ultimately, NetFlow can lend a hand in predicting future usage by keeping track of past traffic. Together with knowing what host is struggling to cope with the current flows, NetFlow is an invaluable help in capacity planning.

What is sFlow

sFlow stands for “sampled flow”, and the name itself reflects what the protocol does. It is an industry standard protocol for packet export at layer 2 of the OSI stack.

Originally developed by InMon Corp, sFlow is today maintained by the sFlow.org consortium, which continues to develop the protocol’s specifications. Currently, the protocol is supported by multiple network device manufacturers, as well as by network management software vendors.

How sFlow works

Unlike NetFlow, and despite the name, sFlow has no concept of “flows” but works by sampling packets received on a device operating at OSI layer 2 or above (like a switch or router with an installed sFlow agent). sFlow thus operates on a partial image of the traffic, whereas NetFlow collects almost everything.

What sFlow actually does is perform two types of sampling. One is randomly sampling packets or application layer operations, and the other is a time-based sampling based on counters. These are referred to, respectively, as flow samples and counter samples.

The actual sampling is accomplished as follows: whenever a packet arrives at an interface, the sFlow agent decides whether to sample it or not. The decision is based on a counter that is decreased with each packet. When that counter reaches 0, the sample is taken and sent to a central server as an sFlow datagram for analysis. This is the sFlow collector, equivalent to the NetFlow one.

The sample rate of packets is defined by the administrator. Depending on the desired accuracy level, a larger ratio of sampled packets can be configured. A higher ratio yields more accurate data at the cost of more traffic generated towards the collector.
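The countdown logic can be sketched as follows. This Python class is a simplified model, not the code of a real sFlow agent. The counter is reloaded with a random value whose average equals the configured rate, so that on average one packet in `rate` is sampled, and the total traffic is estimated by scaling the number of samples back up. The class and method names are our own.

```python
import random


class PacketSampler:
    """Sample on average one packet out of every `rate` packets."""

    def __init__(self, rate, rng=None):
        if rate < 1:
            raise ValueError("rate must be at least 1")
        self.rate = rate
        self.rng = rng or random.Random()
        self.seen = 0
        self.samples = 0
        self._skip = self._next_skip()

    def _next_skip(self):
        # Uniform between 1 and 2*rate - 1, so the mean skip equals `rate`.
        return self.rng.randint(1, 2 * self.rate - 1)

    def observe(self):
        """Account for one arriving packet; return True if it is sampled."""
        self.seen += 1
        self._skip -= 1
        if self._skip == 0:
            self.samples += 1
            self._skip = self._next_skip()
            return True
        return False

    def estimated_total(self):
        """Scale the sample count back up to an estimate of all packets."""
        return self.samples * self.rate
```

With a rate of 1 every packet is sampled. With a rate of 100, the estimate only approaches the true packet count over a large number of packets, which is the accuracy trade-off mentioned above.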

sFlow data is sent as a UDP packet over port 6343. The possible loss of data due to the use of the UDP datagrams instead of TCP packets is considered acceptable.

sFlow use cases

The main use case of sFlow is to analyze high performance networks to observe problems in abnormal traffic patterns. sFlow exists to make these patterns easily and quickly visible to the administrator, so they can be diagnosed and corrected rapidly.

Continuously monitoring traffic flows on all ports makes sFlow ideal to spot congested links, identify the source of traffic, and the associated application level conversations.

In security and audit analysis, sFlow helps by building a detailed traffic history, establishing thus a baseline for normal behavior. Deviations from this can then be effortlessly identified through an audit.

As with NetFlow, sFlow can provide info on the usage of bandwidth of network peers. When an extreme level of accuracy is not required, sFlow can substitute NetFlow effortlessly.

What is IPFIX

IPFIX (Internet Protocol Flow Information eXport) is the direct successor of NetFlow. It was born out of the need to have an open protocol to export the flow information from supported devices. Cisco owns NetFlow and while many other vendors implemented it, it is still a proprietary protocol. IPFIX was standardized by the IETF on the basis of version 9 of the NetFlow protocol, making them quite related.

IPFIX is a standard that defines how IP flow information should be formatted and transferred from exporter to collector.

How IPFIX works

IPFIX works similarly to NetFlow, but has a few more steps. A series of Metering Processes are present at one or more Observation Points, much like the metering done inside a NetFlow exporter. These processes aggregate and filter information about observed packets, creating the flows. An Exporter gathers each of the Observation Points into a larger Observation Domain, and sends the flows through IPFIX to a Collector. The collector, ultimately, takes care of receiving and storing the flows for analysis.

There is no limitation to how many exporters and collectors exist and how they are interconnected with each other. Multiple exporters can have a single collector, or a pool of collectors can receive data from a lone exporter.

In IPFIX, each sender periodically sends IPFIX messages to the configured receivers. Unlike other “flow-based protocols”, IPFIX can use both TCP and UDP, but prefers SCTP (Stream Control Transmission Protocol), a reliable transport protocol with built-in support for multiplexing and multi-streaming.

IPFIX introduced the formatting of the flows through the use of Templates. The protocol is extensible, leaving the user to define custom data types to include in IPFIX messages. Templates are particularly useful when dealing with different vendors' implementation of the protocol.
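The role of a Template can be pictured as a list of field identifiers and lengths that tells the collector how to slice up the data records that follow. The Python sketch below decodes a single record this way. The information element numbers are taken from the IANA IPFIX registry. It covers only the record decoding step and skips message headers, template sets and variable-length fields. The function name `decode_record` is hypothetical.

```python
import socket

# A few IANA-registered information elements (ID -> name); illustrative subset.
ELEMENTS = {
    1: "octetDeltaCount",
    2: "packetDeltaCount",
    4: "protocolIdentifier",
    7: "sourceTransportPort",
    8: "sourceIPv4Address",
    11: "destinationTransportPort",
    12: "destinationIPv4Address",
}


def decode_record(template, data):
    """Decode one data record using a template of (element_id, length) pairs."""
    record, offset = {}, 0
    for element_id, length in template:
        raw = data[offset:offset + length]
        if len(raw) != length:
            raise ValueError("record is shorter than the template describes")
        name = ELEMENTS.get(element_id, "element_%d" % element_id)
        if name.endswith("IPv4Address"):
            record[name] = socket.inet_ntoa(raw)
        else:
            record[name] = int.from_bytes(raw, "big")  # network byte order
        offset += length
    return record
```

Because the collector only needs the template to make sense of a record, an exporter can add or drop fields without any change on the receiving side, which is what makes the format extensible.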

IPFIX use cases

IPFIX has the same use cases as NetFlow. Bandwidth monitoring, discovering resource bottlenecks, and predicting future usage are all well within the scope of what IPFIX can provide.

IPFIX improves on NetFlow through its preference for SCTP, a transport that is more reliable than the UDP that NetFlow typically relies on. In networks where congestion is frequent and increased reliability is necessary, IPFIX is the preferred protocol.

Hardware vendors can specify their own Vendor ID to be exported into flows and recognized by IPFIX. This can help to filter the data more efficiently.

Being an open IETF standard, IPFIX is more easily supported and extended in the future than a proprietary protocol such as NetFlow. For future-proofing a network monitoring solution, IPFIX may be a desirable choice.

What is SSH

SSH stands for “Secure Shell Protocol”, with its main intent already clear from the name. In 1995, it was developed to provide a secure alternative to login protocols like rlogin, Telnet and FTP. These worked well but had no concept of security whatsoever, creating security risks that, while originally considered negligible, proved to be unsustainable. SSH’s aim was not monitoring devices but logging into them, with monitoring as a possible task to be accomplished after login.

SSH was rapidly adopted on all main operating systems, including embedded systems, and network devices. As an open protocol under IETF, it has given birth to a series of derivations, like SCP (for file copying), SFTP (a more secure FTP), FASP (for data transfer) and others.

How SSH works

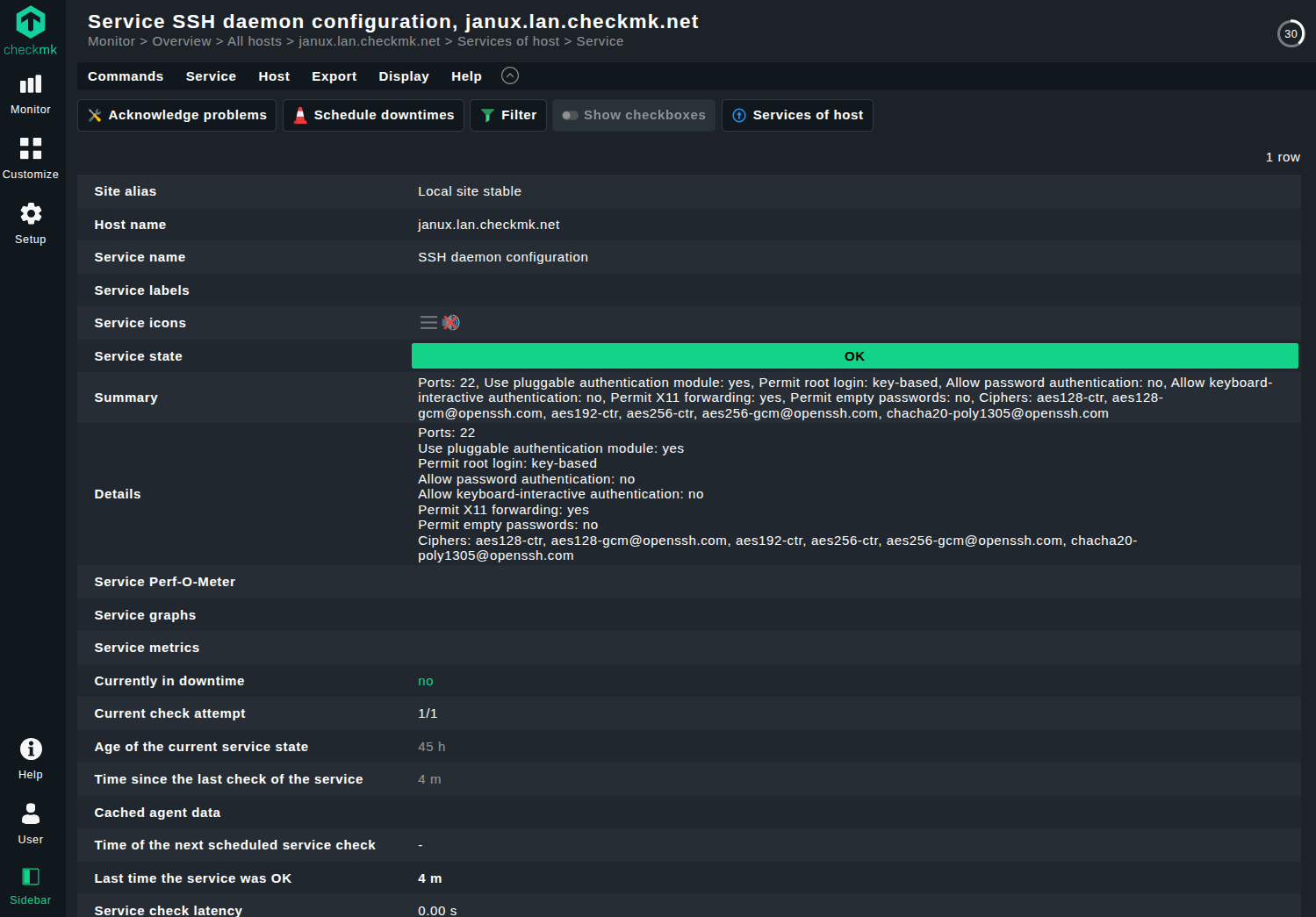

SSH’s task is mainly to allow accessing a remote resource and doing so securely. A series of authentication methods are supported to give the administrator privileges on a remote host.

Simple user/password authentication is supported and is the easiest, but least secure, method. More commonly, SSH logins are authenticated through public key authentication: a cryptographic pair of keys is generated locally and only the public key is placed on the SSH server, while the private key never leaves the client. At login, the client proves that it holds the matching private key by signing data that the server verifies with the stored public key. If the signature is valid, access is granted.

Similarly, host-based authentication uses cryptographic keys as well, but on a host’s level rather than a user’s. Keyboard-interactive authentication uses a challenge-response process that requires the user to interactively enter a password or other response. Kerberos-enabled machines can be accessed through SSH’s use of GSSAPI authentication. Any of these methods can be enabled and disabled in the configuration file on the server. It is advisable to leave enabled only the ones that are strictly necessary, to reduce the possibilities of intrusion.

Once access is granted, a shell is opened on the remote resource and can be used as if it were a local one, with privileges depending on the user role on the server.
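The principle behind public key authentication, namely that the private key stays on the client and the server only checks a signature, can be demonstrated with a deliberately tiny toy example in Python. It uses textbook RSA with the classic small primes 61 and 53, which is hopelessly insecure and is not how SSH implements its signatures. The real protocol also signs session-specific data rather than a bare challenge. All names here are our own.

```python
import hashlib

# Toy RSA key pair built from tiny textbook primes (61 and 53).
# Hopelessly insecure: for illustration of the principle only.
N = 61 * 53   # public modulus, known to both sides
E = 17        # public exponent, stored on the server
D = 2753      # private exponent, never leaves the client


def digest(challenge):
    """Reduce a challenge to a number smaller than the modulus."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % N


def client_sign(challenge):
    """The client signs the challenge with its private key."""
    return pow(digest(challenge), D, N)


def server_verify(challenge, signature):
    """The server checks the signature using only the public key."""
    return pow(signature, E, N) == digest(challenge)
```

The server never sees `D`. It sends a challenge, receives the result of `client_sign`, and grants access only if `server_verify` returns `True`.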

SSH use cases

SSH, unlike monitoring protocols, does not collect data for you. It only provides a secure way to access remote resources, granting a high level of privileges. Therefore, SSH is not a network monitoring protocol, but is nonetheless extremely useful in troubleshooting systems.

Access with full privileges to a misbehaving host allows the administrator to have full control over that host, analyze what is happening within it from a point of observation that is not limited by a protocol's capabilities. As anything that can be accessed on the remote device can be accessed through SSH with enough privileges, operations such as reconfiguring a failed database, or resetting a firewall, or fixing an incorrect installation can all be effectively done.

SSH grants great powers to the administrator. While being a mostly manual protocol, where nothing like polling, sampling, or automatic analyzing exists, SSH is to be used when delving deep into a device’s internals.

With great power comes great responsibility, as is well known, and SSH is no exception to the rule. Being in full charge of remote devices means that misconfiguring them is an easy mistake to make. Other monitoring protocols like SNMP or the flow-based ones are less prone to these errors. SSH also requires a longer set-up to start working. Keys must be exchanged, user accounts created and more. Installing SSH itself is easy and well-supported on all major operating systems, but setting it up is not as fast as with an agent-based monitoring protocol.

What is WMI

WMI stands for Windows Management Instrumentation and is an extension of the Windows Driver Model. It provides an interface to gather information about a Windows host, plus an API to extend and control it through scripting languages (VBScript and PowerShell).

It is Microsoft’s implementation of the Web-Based Enterprise Management (WBEM) standard. WMI therefore belongs to a family of implementations that work on various operating systems, but WMI itself is Windows-specific.

How WMI works

WMI operates in a way that is logically similar to other agent-based monitoring solutions. A component manages the namespaces and provides info to WMI consumers, much like a remotely installed agent answers a polling server. WMI components have been present on all Windows systems since Windows 2000, and can be installed on previous versions.

WMI needs to be set up on the component’s side. This requires a multitude of open ports on the host to be monitored. Since it depends on other Windows services such as NetBIOS, SMB, and RPC being open, all their respective ports must be accessible from the WMI consumer. WMI itself uses a dynamic range of ports, which can be anything between 1025 and 5000 (on Windows 2000, XP and Server 2003) or 49152 and 65535 (on Windows 2008, Vista and later). It can be configured to use a fixed port, though.

Once all is set up, WMI provides an infrastructure to discover and perform management tasks. It is a management protocol, not just a monitoring one. Data from WMI is obtained through the use of scripts or full applications, using various languages (ActiveX, PowerShell, Visual Basic, C++, C# and others). These applications connect to the WMI provider, which is then queried either via PowerShell or with WQL (WMI Query Language), a subset of SQL specific to WMI.
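Since WQL is a subset of SQL, a query reads much like a familiar SELECT statement. The short Python sketch below only composes the query text. Running it requires a Windows host and a WMI client, which are outside the scope of this example. `Win32_LogicalDisk` and its `DeviceID`, `FreeSpace`, `Size` and `DriveType` properties are standard WMI names, while the `build_wql` helper is our own.

```python
def build_wql(wmi_class, properties=("*",), where=None):
    """Compose a WQL SELECT statement as plain text."""
    query = "SELECT %s FROM %s" % (", ".join(properties), wmi_class)
    if where:
        query += " WHERE " + where
    return query


# Free space on local fixed disks (DriveType 3) via Win32_LogicalDisk.
DISK_QUERY = build_wql(
    "Win32_LogicalDisk",
    ("DeviceID", "FreeSpace", "Size"),
    "DriveType = 3",
)
```

The resulting text, `SELECT DeviceID, FreeSpace, Size FROM Win32_LogicalDisk WHERE DriveType = 3`, is the kind of query a monitoring tool would hand to WMI to check the disk usage of a Windows host.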

WMI use cases

WMI is not the most straightforward of the protocols, as can easily be guessed from the previous section. This is because the protocol exposes a great deal of data and allows for configuring and maintaining Windows hosts, combining features of both SSH and classic monitoring protocols such as SNMP.

WMI is thus quite powerful. It can be used for filesystem monitoring, gathering statuses of computers, and configuring settings to a granular level that is hard to replicate with other protocols. It is common to use WMI to configure security settings or system properties on a remote host.

As with SSH, WMI can run applications and execute code remotely as if it were local. Logs can be accessed through WMI, providing a primitive alternative under Windows to a Syslog client/server setup.

Data about network interfaces, metrics on network traffic and what else may affect your infrastructure are all exposed through WMI, which can then be used to monitor traffic on a Windows network.

All these possibilities are accessed through scripting or through the WMI command-line interface (WMIC). Be aware of the implicit risk of setting up the monitored systems with too generous permissions for WMI: the more is accessible to an administrator, the more is accessible to an attacker too.

A last word of advice. Microsoft was aware of the disadvantages of WMI, like heavy resource usage and complex setup, and has worked on improving it in recent years. The result is MI (Management Infrastructure), which simplifies interacting with WMI providers by introducing a new API, using XML to create cmdlets in PowerShell to automate monitoring, and generally enriching the PowerShell semantics related to WMI. MI is backward compatible with WMI, and is the suggested choice if you have never set up WMI before.

What is RMON

RMON (Remote Network Monitoring) is an extension of SNMP, originally developed by IETF, to monitor OSI layer 1 and 2 information on Ethernet and Token Ring networks. These were not supported by SNMP itself. Later versions of RMON extended it to include network and application layer monitoring. A similar version of RMON called SMON (Switch Monitoring) supports switched networks. For high-capacity networks, HCRMON is an extension of the basic RMON protocol.

RMON is present on many high-end switches and routers as an additional protocol alongside the SNMP agent.

How RMON works

RMON is not particularly different from SNMP. Agents under RMON are called “probes” and act as servers, collecting and analyzing the info to be sent to the network management applications that support the protocol. These act as clients in a server/client relationship.

Often SNMP-enabled devices only require additional software to be installed to become RMON-enabled too, while others may require a hardware module called a “hosted probe” to be physically installed.

Once enabled, RMON works by monitoring the traffic going through the network device it is installed on, watching for congestion, dropped packets, and excessive collisions. Generally speaking, an RMON probe is present on only one device per subnet. The data collected by the probe can be used by an SNMP agent or can trigger an SNMP trap when specific conditions are met. There are a total of nine groups of RMON monitoring elements that cover various aspects of traffic that RMON can monitor. These groups range from network statistics, to the frequency of the data being sampled, to addresses, to ranking hosts according to traffic size and usage.
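The “specific conditions” are typically thresholds on a monitored value. The Python sketch below is loosely modelled on the rising and falling thresholds of RMON’s alarm group: after a rising event, no further rising event is generated until the value has dropped to the falling threshold, which keeps a value hovering around the limit from flooding the manager with traps. The class name and details are our own simplification and not an implementation of the RMON MIB.

```python
class ThresholdAlarm:
    """Report threshold crossings with hysteresis between two limits."""

    def __init__(self, rising, falling):
        if falling > rising:
            raise ValueError("falling threshold must not exceed rising one")
        self.rising = rising
        self.falling = falling
        self.armed_rising = True    # may a rising event fire next?
        self.armed_falling = True   # may a falling event fire next?

    def sample(self, value):
        """Feed one sampled value; return 'rising', 'falling' or None."""
        if value >= self.rising and self.armed_rising:
            self.armed_rising, self.armed_falling = False, True
            return "rising"
        if value <= self.falling and self.armed_falling:
            self.armed_falling, self.armed_rising = False, True
            return "falling"
        return None
```

With a rising threshold of 100 and a falling one of 20, the samples 120 and 130 produce a single rising event, and the next one can only occur after a sample of 20 or lower.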

RMON use cases

Generally speaking, RMON has the same use cases as SNMP. RMON probes have more responsibility for data collection and processing, removing some of the burden from the clients. This means that less traffic is generated on the network compared to classic SNMP, which may be advantageous in congested networks.

RMON is designed to be “flow-based” instead of “device-based”. It gathers more metrics about traffic rather than individual devices. In this, RMON is akin to NetFlow and sFlow. RMON is thus chosen when it is desired to predict changes in traffic patterns.

Asset tracking, remote troubleshooting and mitigation almost in real time are all possible with RMON. We say “almost in real time” because the statistics gathered by the probes are polled rather than pushed, with only alarm events delivered as SNMP traps, but setting up a frequent update from the probes is not overly complex.

One issue of RMON is that more is delegated to remote devices, where the probes are installed, increasing their resource usage. This can put a strain on already burdened switches and routers, for example. Often this issue is circumvented by implementing only a subset of the RMON protocol, focusing more on alarms and event-based monitoring capabilities.

What is Suricata

Suricata is an open-source intrusion detection (IDS) and prevention (IPS) system developed by the Open Information Security Foundation (OISF). The first release came out in 2010, and it is available for all major operating systems.

An intrusion detection system detects and alerts about possible threats to a system. An intrusion prevention system also takes action, like blocking the traffic that may present a threat. Suricata includes both.

How Suricata works

Suricata monitors packets on network interfaces. Once installed on a host that may be subject to any type of threat, it starts capturing packets for analysis. These are matched against a list of signatures, also called rules. Each rule is a single line in a rules file, consisting of an action to take when the signature matches, a header (defining the protocol, IP addresses, ports and direction of the rule), and a set of options.

The actions are firewall-like: a matching packet can be dropped, rejected, let through, generate an alert and so on.
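To show how a rule is put together, the following Python sketch splits a rule line into its action, header fields and options. The sample rule in the usage note is made up for this article, and the parser is deliberately naive: it does not handle escaped semicolons inside quoted option values, for instance. It is meant to illustrate the layout, not to replace Suricata’s own rule parser.

```python
import re

RULE = re.compile(
    r"^(?P<action>\w+)\s+(?P<protocol>\S+)\s+"
    r"(?P<src>\S+)\s+(?P<src_port>\S+)\s+(?P<direction>->|<>)\s+"
    r"(?P<dst>\S+)\s+(?P<dst_port>\S+)\s+\((?P<options>.*)\)\s*$"
)


def parse_rule(line):
    """Split a rule into action, header fields and an options dictionary."""
    match = RULE.match(line.strip())
    if match is None:
        raise ValueError("not a recognizable rule: %r" % line)
    rule = match.groupdict()
    options = {}
    # Naive split: assumes no semicolons inside quoted option values.
    for item in rule["options"].split(";"):
        item = item.strip()
        if not item:
            continue
        key, _, value = item.partition(":")
        options[key.strip()] = value.strip().strip('"')
    rule["options"] = options
    return rule
```

For a rule such as `alert tcp any any -> $HOME_NET 22 (msg:"SSH connection attempt"; sid:1000001; rev:1;)`, the parser reports the action `alert`, the protocol `tcp`, the destination port `22`, and the options `msg`, `sid` and `rev`.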

Suricata comes with numerous rulesets to get started without having to implement your own from scratch. The rules are the core of Suricata and work as both an intrusion detection and prevention system.

Suricata use cases

From the above, it is clear what Suricata is used for. Wherever there is a risk of intrusions, attacks, DDoS and other types of threats to a network, Suricata can be useful. It can be used to monitor a plethora of protocols, from classic TCP/IP traffic to FTP file transfers, from flows to SNMP.

A real-time system of alerts in case a suspicious packet crosses into your infrastructure is a typical way to implement Suricata. The default operational mode of Suricata is passive, detection only, and that suits this use case.

Suricata is also helpful in creating a geographic breakdown of the traffic entering and leaving a network. The Suricata logs can be fed to a SIEM tool to understand your traffic distribution.

Conclusion

We have looked at the most common network protocols that in one way or another are used for monitoring, configuring, securing, and maintaining network infrastructures. The choice of one over the other depends on your use case and the requirements of your company. Clearly, many exist and they aim at different objectives. Using them all just to cover every edge case may be wasteful, depending on the value of each case.

It is easier to use a single monitoring tool like Checkmk instead. Checkmk allows a comprehensive coverage of your infrastructure by supporting all the necessary monitoring protocols. It can make your life simpler by providing a clear and user-friendly interface between the administrator and the various protocols mentioned here, without compromising accuracy and completeness.